Data Breach Alert! How Microsoft’s GitHub Misstep Led to a 38TB Data Leak

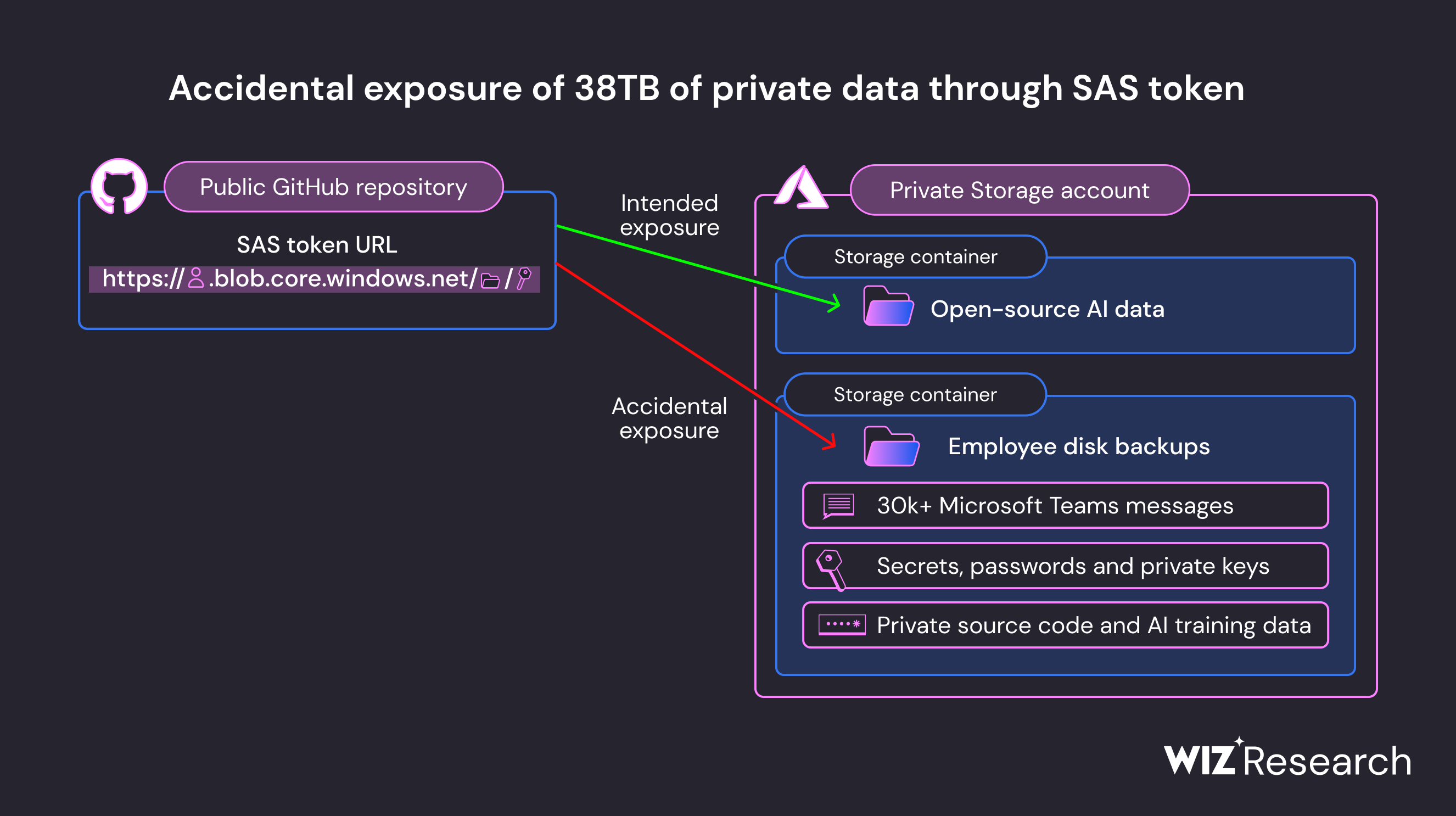

In a recent security lapse, a Microsoft employee who was publishing open-source AI training data on GitHub unintentionally exposed a startling 38 terabytes of sensitive internal information. Cybersecurity researchers at Wiz Research found the leak and promptly reported it to Microsoft. The exposure included more than 30,000 confidential Microsoft Teams messages as well as private keys and passwords. According to Microsoft’s Security Response Center, no customer data was exposed and no other internal services were compromised, and customers do not need to take any action.

Wiz Research, which is known for uncovering data exposure incidents, described the sequence of events in detail in a report released on Monday. While searching for misconfigured storage containers, its researchers came across a GitHub repository belonging to Microsoft’s AI research division. The repository provides open-source code and machine learning models for image recognition. The root of the problem was a URL in the repository that pointed to a Microsoft-owned Azure storage account and carried an excessively permissive Shared Access Signature (SAS) token.

SAS tokens are signed URLs that grant access to Azure Storage resources at levels ranging from read-only to full control. In this case the token was misconfigured with full-control permissions. As a result, anyone who had the link, the Wiz researchers and potential malicious actors alike, could view, delete, and modify the files in the storage account. The exposed data included backups of two former Microsoft employees’ personal computers.

These backups contained more than 30,000 internal Microsoft Teams messages from 359 Microsoft employees, along with secret keys and passwords for Microsoft services. After Microsoft learned of the incident on June 22, it moved quickly to cut off external access to the storage account by revoking the SAS token, and the leak was closed on June 24. Follow-up investigation by the Microsoft Security Response Center confirmed that the exposure posed no risk to customers. In its post-incident analysis, Microsoft offered recommendations for preventing similar incidents in the future.

These best practices include limiting SAS URLs to the smallest set of resources required and restricting permissions to exactly what an application needs. Microsoft also urges users to set SAS URLs to expire within one hour or less. Acknowledging the need for stronger safeguards, Microsoft committed to improving its detection and scanning capabilities so that over-provisioned SAS URLs are identified more effectively.

This incident is a reminder that even tech giants are susceptible to human error and security failures, and it underscores how important it is to configure security settings correctly in cloud-based systems. Notably, it is not the only security issue Microsoft has faced recently. In a separate incident in July, Chinese threat actors stole a secret Microsoft key and used it to access email accounts belonging to the U.S. government.

Wiz Research also contributed to the investigation of that security lapse. In the face of a changing threat landscape, Microsoft’s commitment to continually improving its security practices remains crucial.

A Misconfigured GitHub Repository

The story begins with the Wiz Research team’s ongoing investigation into cloud-hosted data that has been unintentionally made public. While scanning for misconfigured storage containers, the team came across a Microsoft-affiliated GitHub repository called “robust-models-transfer”. The repository, which belonged to Microsoft’s AI research group, was intended to provide open-source code and AI models for image recognition.

The repository’s purpose was to share open-source models, but the URL it contained granted access to the entire Azure storage account rather than only the models that were meant to be public. This mistake exposed a vast amount of additional private data. According to Wiz Research, the exposed storage account contained a whopping 38 terabytes of data, including personal computer backups belonging to Microsoft employees.

This cache of confidential data included passwords for Microsoft services, secret keys, and more than 30,000 internal Microsoft Teams messages from 359 employees. The misconfigured SAS token made the incident more severe, because it granted “full control” permissions rather than read-only access. An unauthorized person could therefore not only read the files in the storage account but also delete or overwrite them.

What makes the incident even more troubling is the repository’s original purpose: providing AI models for use in training code. Users were instructed to download a model data file from the SAS link and feed it into their scripts. These files were in the ckpt format produced by the TensorFlow library and were serialized with Python’s pickle format, which by design allows arbitrary code execution when a file is loaded.

This means an attacker could have injected malicious code into any of the AI models stored in this account, potentially compromising every user who trusted Microsoft’s GitHub repository.
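The pickle risk described above can be illustrated with a minimal sketch. The `Payload` class and the helper names are hypothetical, and the callable used here (`sorted`) is deliberately harmless; an attacker would substitute something destructive. The restricted loader follows the general pattern of overriding `find_class` and is only one possible mitigation, not something the original report prescribes.

```python
import io
import pickle


class Payload:
    """Hypothetical stand-in for a tampered model file (harmless here)."""

    def __reduce__(self):
        # Instead of the object's state, pickle records "call sorted([3, 1, 2])".
        return (sorted, ([3, 1, 2],))


blob = pickle.dumps(Payload())

# Loading the bytes calls sorted(...); the result is a list, not a Payload.
result = pickle.loads(blob)


class NoGlobalsUnpickler(pickle.Unpickler):
    """Refuses every global lookup, so no callable can be imported and run."""

    def find_class(self, module, name):
        raise pickle.UnpicklingError(f"blocked global: {module}.{name}")


def restricted_loads(data: bytes):
    """Load plain data (dicts, lists, numbers, strings) but nothing callable."""
    return NoGlobalsUnpickler(io.BytesIO(data)).load()
```

With this sketch, `restricted_loads(blob)` raises `pickle.UnpicklingError`, while plain data such as `pickle.dumps({"a": 1})` still loads normally.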

The Role of SAS Tokens in the Data Exposure

Understanding how SAS tokens work in Azure is essential to understanding the root cause of this incident. SAS tokens are signed URLs that grant access to Azure Storage data. Users can tailor the level of access, with permissions ranging from read-only to full control, and the scope can be a single file, a container, or an entire storage account. Users can also set the expiry time, including the option of creating tokens that effectively never expire.
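Because a SAS token is carried in the URL's query string, its scope, permissions, and expiry can be read without contacting Azure. The following is a minimal sketch using only the Python standard library. The `SasFields` type, the `parse_sas_url` helper, and the example URL (account name, date, and signature) are all hypothetical; the query parameter names (`sp`, `se`, `ss`, `srt`, `sr`, `sig`) are the ones Azure Storage uses for SAS tokens.

```python
from dataclasses import dataclass
from typing import Optional
from urllib.parse import parse_qs, urlsplit


@dataclass
class SasFields:
    permissions: Optional[str]     # sp: e.g. "r" (read-only) or "rwdl"
    expiry: Optional[str]          # se: expiry time in UTC
    services: Optional[str]        # ss: present on account SAS tokens
    resource_types: Optional[str]  # srt: present on account SAS tokens
    resource: Optional[str]        # sr: present on service SAS tokens
    signed: bool                   # whether a sig parameter is present

    @property
    def is_account_sas(self) -> bool:
        # Account SAS tokens carry both signed services and resource types.
        return self.services is not None and self.resource_types is not None


def parse_sas_url(url: str) -> SasFields:
    """Extract the SAS-related query parameters from a URL."""
    query = parse_qs(urlsplit(url).query)

    def first(name: str) -> Optional[str]:
        values = query.get(name)
        return values[0] if values else None

    return SasFields(
        permissions=first("sp"),
        expiry=first("se"),
        services=first("ss"),
        resource_types=first("srt"),
        resource=first("sr"),
        signed="sig" in query,
    )


# Hypothetical URL for illustration only; not the token from the incident.
example = (
    "https://exampleaccount.blob.core.windows.net/models/model.ckpt"
    "?sv=2021-08-06&ss=b&srt=sco&sp=rwdl&se=2051-01-01T00:00:00Z&sig=REDACTED"
)
fields = parse_sas_url(example)
```

Here `fields.permissions` is `"rwdl"` and `fields.is_account_sas` is true, which is the kind of broad, long-lived token the incident involved.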

Microsoft’s repository used account SAS tokens, the most common type. Generating an account SAS token is simple: the user chooses the token’s scope, permissions, and expiry date, and the token is then signed with the account key on the client side. As this incident highlights, one of the main drawbacks of SAS tokens is that Azure has no built-in mechanism for monitoring or tracking them.

As a result, administrators have no way of knowing that a highly permissive, non-expiring token exists or where it is circulating. The only way to revoke such a token is to rotate the account key that signed it, which invalidates every other token signed with the same key. These weaknesses make SAS tokens an attractive target for attackers who want to reach exposed data or maintain persistence in compromised storage accounts.
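Client-side signing and key-based revocation can be sketched as follows. Azure computes a SAS signature as a Base64-encoded HMAC-SHA256 over a "string to sign", keyed with the Base64-decoded account key. The string used below is a simplified placeholder rather than Azure's real layout, and the helper names and key material are hypothetical.

```python
import base64
import hashlib
import hmac
import os


def sign(account_key_b64: str, string_to_sign: str) -> str:
    """Compute a Base64 HMAC-SHA256 signature entirely offline."""
    key = base64.b64decode(account_key_b64)
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")


def is_valid(current_key_b64: str, string_to_sign: str, signature: str) -> bool:
    """What a service can do: recompute with the current key and compare."""
    expected = sign(current_key_b64, string_to_sign)
    return hmac.compare_digest(expected, signature)


def new_account_key() -> str:
    """Hypothetical stand-in for generating or rotating an account key."""
    return base64.b64encode(os.urandom(64)).decode("ascii")


# Simplified placeholder fields; NOT the real Azure string-to-sign format.
token_fields = "exampleaccount\nrwdl\nb\nsco\n2051-01-01T00:00:00Z"

original_key = new_account_key()
signature = sign(original_key, token_fields)  # no request to Azure is made
valid_before_rotation = is_valid(original_key, token_fields, signature)

rotated_key = new_account_key()
valid_after_rotation = is_valid(rotated_key, token_fields, signature)
```

Since signing needs nothing but the key, the service has no record that a token was issued. After rotation the old signature no longer verifies, and the same is true of every other token signed with the old key, which is why revocation is so coarse.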

According to recent reports, attackers are exploiting this lack of monitoring by issuing privileged SAS tokens as a backdoor. Because the issuance of these tokens is not recorded anywhere, detecting and responding to such activity is difficult. The incident is a clear reminder of how important it is to secure cloud resources properly and to enforce strong access controls, especially where sensitive data is at stake.

Technology risks and vulnerabilities change over time, which calls for continual awareness and proactive security measures from businesses and individuals alike. In Azure, SAS tokens are powerful tools for sharing access to storage resources. They also carry inherent security risks, which can be examined from several angles: permissions, hygiene, management, and monitoring.

Permissions

One of the primary risks with SAS tokens lies in the level of access they can grant. SAS tokens can be configured with excessive permissions, including read, list, write, or delete access, or they can provide wide access scopes, allowing users to access adjacent storage containers. This flexibility, while convenient, can inadvertently lead to over-privileged tokens.

Hygiene

SAS tokens have an expiration problem. Wiz’s research and monitoring show that organizations often use tokens with very long lifetimes, sometimes effectively indefinite ones. This was evident in the Microsoft incident, where the SAS token remained valid until 2051. The longer a token stays valid, the longer the window in which a leaked token can be misused.
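The permission and lifetime concerns above can be checked mechanically. The sketch below assumes the `sp` and `se` values have already been extracted from a SAS URL. The `audit_sas` function, the read-and-list default allow-list, and the example values are hypothetical; the one-hour limit mirrors Microsoft's recommendation, and only the most common permission letters are mapped to names.

```python
from datetime import datetime, timedelta, timezone
from typing import FrozenSet, List

# Common SAS permission letters; letters not listed are reported as-is.
PERMISSION_NAMES = {
    "r": "read", "w": "write", "d": "delete", "l": "list",
    "a": "add", "c": "create", "u": "update", "p": "process",
}


def parse_expiry(se: str) -> datetime:
    """Parse an ISO 8601 expiry value, treating it as UTC."""
    parsed = datetime.fromisoformat(se.replace("Z", "+00:00"))
    return parsed if parsed.tzinfo else parsed.replace(tzinfo=timezone.utc)


def audit_sas(
    sp: str,
    se: str,
    now: datetime,
    allowed: FrozenSet[str] = frozenset("rl"),      # assumption: read + list
    max_lifetime: timedelta = timedelta(hours=1),   # Microsoft's suggested cap
) -> List[str]:
    """Return human-readable findings; an empty list means nothing was flagged."""
    findings = []
    extra = sorted(set(sp) - allowed)
    if extra:
        names = ", ".join(PERMISSION_NAMES.get(letter, letter) for letter in extra)
        findings.append(f"grants more than allowed: {names}")
    remaining = parse_expiry(se) - now
    if remaining > max_lifetime:
        findings.append(f"remains valid for about {remaining.days} more days")
    return findings


# Illustrative values only; not the actual token from the incident.
now = datetime(2023, 6, 22, tzinfo=timezone.utc)
risky = audit_sas("rwdl", "2051-01-01T00:00:00Z", now)
tight = audit_sas("r", "2023-06-22T00:30:00Z", now)
```

In this sketch `risky` contains two findings (excess write and delete permissions, and a lifetime measured in decades), while `tight` is empty.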

Management and Monitoring

Account SAS tokens are notoriously difficult to manage and revoke. Azure offers no official way to track them, so it is hard to determine how many tokens have been issued and are still in use. Because SAS tokens are created on the client side, Azure has no record of their creation, and this inability to track issuance compounds the security risk.

Revoking SAS tokens is similarly difficult. The only option is to rotate the entire account key, which invalidates every token signed with that key. This lack of granularity makes revocation a cumbersome process. Monitoring SAS token usage is another challenge: logging must be enabled on each storage account separately, which incurs extra cost that depends on the volume of requests.

SAS Security Recommendations

Addressing the security risks associated with SAS tokens requires a multifaceted approach:

Management

- Consider SAS Tokens as Sensitive as Account Keys: Given the difficulties in managing SAS tokens, businesses should treat them with the same level of sensitivity as the account key itself and refrain from sharing them with third parties.

- Use Service SAS with Stored Access Policies: For external sharing, consider using a Service SAS with a Stored Access Policy. This approach centralizes token management and revocation.

- Leverage User Delegation SAS for Time-Limited Access: Choose User Delegation SAS tokens when sharing content that requires time-limited access. These tokens expire after at most 7 days, and they provide control and visibility over the identity of the token’s creator and its users.

- Create Dedicated Storage Accounts for External Sharing: To limit the potential impact of over-privileged tokens, consider creating dedicated storage accounts for external sharing.

- Disable SAS Tokens as Needed: SAS access can be disabled for each storage account separately. To track and enforce this as a policy, consider adopting a Cloud Security Posture Management (CSPM) service.

- Block Access to “List Storage Account Keys” Operation: Blocking access to the “list storage account keys” operation in Azure prevents new SAS tokens from being created, since they cannot be generated without the key.

Monitoring

- Enable Storage Analytics Logs: Enable Storage Analytics logs for every storage account to observe active SAS token usage. These logs contain information on SAS token access.

- Leverage Azure Metrics: Monitor SAS token consumption in storage accounts using Azure Metrics. Storage account events are recorded and aggregated by Azure, permitting users to identify storage accounts with SAS token usage.

Secret Scanning

In addition to the recommendations above, businesses should use secret scanning tools to look for exposed or over-privileged SAS tokens in publicly accessible assets, including websites, mobile apps, and GitHub repositories. As the Microsoft case demonstrates, this proactive approach can help prevent data exposure incidents.
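A very small scanner along these lines can be sketched with the standard library. It is a heuristic, not a replacement for a dedicated secret scanning tool: it flags any URL whose query string carries both `sv` and `sig` parameters, and it reports the URL without its query so the signature is not copied into scan output. The function name and the sample text are hypothetical.

```python
import re
from typing import List, Tuple
from urllib.parse import parse_qs, urlsplit

# Rough URL matcher; stops at whitespace, quotes, and bracket characters.
URL_PATTERN = re.compile(r"https?://[^\s\"'<>()\[\]]+")


def find_sas_urls(text: str) -> List[Tuple[int, str]]:
    """Return (line number, URL without query) for each likely SAS URL."""
    hits = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for match in URL_PATTERN.finditer(line):
            parts = urlsplit(match.group(0))
            query = parse_qs(parts.query)
            # Heuristic: SAS URLs carry a signed version and a signature.
            if "sv" in query and "sig" in query:
                hits.append(
                    (line_number, f"{parts.scheme}://{parts.netloc}{parts.path}")
                )
    return hits


# Hypothetical README content for illustration.
sample = """\
Download the model from
[this link](https://exampleaccount.blob.core.windows.net/models/model.ckpt?sv=2021-08-06&sp=rwdl&se=2051-01-01T00:00:00Z&sig=REDACTED)
Docs: https://example.com/docs?page=2
"""
hits = find_sas_urls(sample)
```

Running a check like this over README files and configuration before publishing would surface a link like the one in the sample, while ordinary URLs are ignored.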

Security Risks in the AI Pipeline

Finally, as businesses adopt AI more widely, security teams need to understand the security risks inherent in every stage of the AI development process. These risks include data oversharing and supply chain attacks made possible by improper permissions. Building effective risk mitigation strategies for AI initiatives requires collaboration among security, data science, and research teams.

In conclusion, the Microsoft GitHub data exposure is a sharp reminder of the security risks that SAS tokens present. Organizations should put the recommendations above into practice and stay vigilant about their cloud security posture. As the use of AI spreads, businesses also need to prioritize AI security and establish clear guidelines for protecting private information throughout the AI development process.