Top 10 Data Lake Startups In 2026

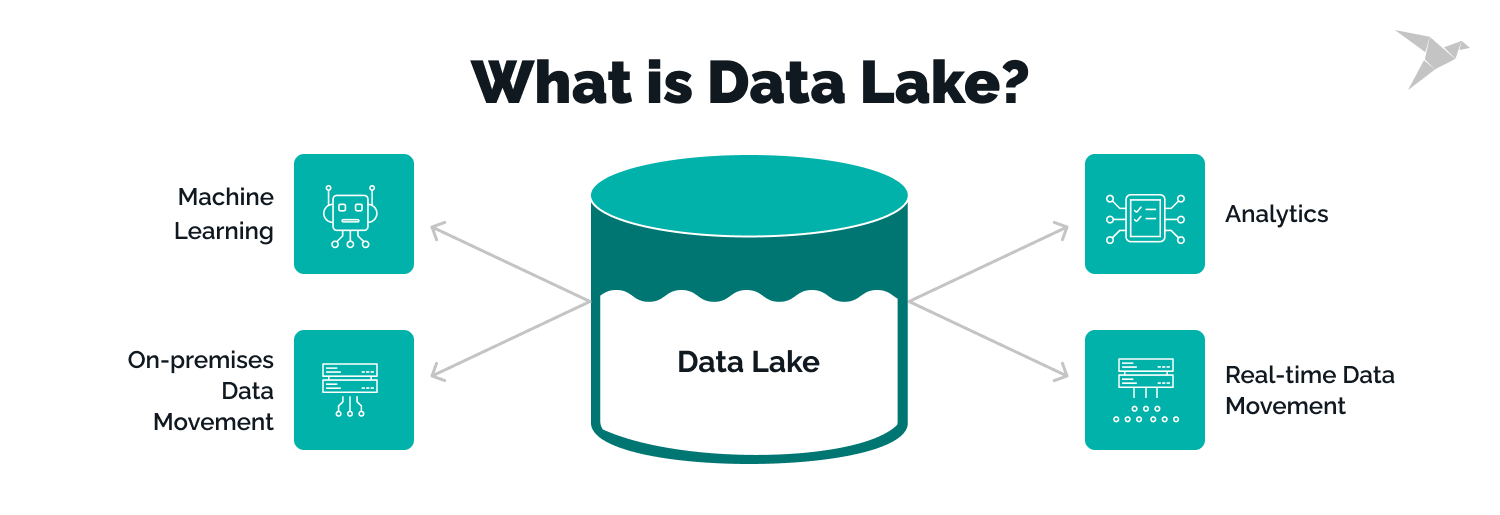

The data infrastructure landscape has undergone a profound transformation over the past decade, moving from the rigid constraints of traditional data warehouses toward more flexible architectures that can accommodate the explosive growth and diverse nature of modern data. At the center of this transformation are data lakes, repositories designed to store vast amounts of structured, semi-structured, and unstructured data in their native formats.

What makes the current moment particularly exciting is the emergence of innovative startups that are reimagining what data lakes can be, combining the flexibility and cost advantages of traditional data lakes with the query performance and governance capabilities previously only available in data warehouses. This convergence has given rise to the “lakehouse” architecture that represents the future of analytical data platforms.

1. Starburst: The Distributed Query Powerhouse

Starburst has emerged as one of the most successful data lake startups, having raised over four hundred fourteen million dollars across multiple funding rounds and achieving a valuation of three point three five billion dollars as of early 2022, firmly establishing it as a unicorn. Founded in 2017 by CEO Justin Borgman, formerly the co-founder and CEO of Hadapt which was acquired by Teradata, Starburst built its platform around Trino, the open-source distributed SQL query engine originally created at Facebook as Presto. This technological foundation gives Starburst the ability to query data across dozens of different data sources without moving or copying data, a capability that resonates strongly with enterprises struggling with data fragmentation across multiple systems.

The core value proposition Starburst delivers centers on data federation and virtualization. Organizations often have critical data scattered across various systems including cloud data lakes on AWS S3 or Azure Blob Storage, legacy on-premises data warehouses like Teradata or Oracle, modern cloud warehouses like Snowflake, operational databases including PostgreSQL and MySQL, and specialized systems for specific workloads. Traditional approaches require either extracting data into a central warehouse, which creates latency and storage costs, or accepting that comprehensive analysis across systems is practically impossible. Starburst enables SQL queries that span these diverse sources, presenting users with unified views despite the underlying data remaining in place.

The platform has evolved significantly beyond basic query federation. Starburst Galaxy, the company’s fully managed cloud offering, provides a lakehouse architecture built on Apache Iceberg that combines the query federation capabilities with native data lake storage and management. Users can store data in open formats on object storage while benefiting from features like ACID transactions, schema evolution, and time travel that Iceberg provides. The platform includes data discovery capabilities that automatically catalog available datasets, built-in governance ensuring appropriate access controls, and query optimization that dramatically improves performance compared to querying raw data files.

Starburst’s success reflects several strategic decisions that have proven prescient. By building on the open-source Trino project rather than proprietary technology, Starburst benefits from a vibrant community of contributors while avoiding vendor lock-in concerns that enterprises increasingly worry about. The company’s focus on serving large enterprises with complex, heterogeneous data estates positions it in a market segment willing to pay premium prices for capabilities that genuinely solve business problems. Recent acquisitions including Varada, which brought data indexing and caching technologies, have enhanced performance for the most demanding workloads.

The company faces competition from both established vendors like Databricks and Snowflake that offer some federation capabilities, and from fellow startups like Dremio that target similar use cases. However, Starburst’s significant funding, growing customer base including brands like Comcast, Grubhub, and DoorDash, and continued product innovation position it as a leader in the distributed query and lakehouse space as organizations increasingly adopt multi-cloud strategies requiring data access across diverse environments.

2. Dremio: The Data Lakehouse Pioneer

Dremio represents another major success story in the data lake startup ecosystem, having raised over four hundred twenty million dollars and achieved a two billion dollar valuation following its Series E funding round in early 2022. Founded in 2015 by Tomer Shiran and Jacques Nadeau, both veterans of the Apache Drill project at MapR, Dremio built its platform around the vision of enabling self-service analytics directly on data lake storage without requiring extraction into data warehouses. This vision has proven remarkably durable as the broader market has embraced the lakehouse architecture Dremio pioneered.

The company’s core technology centers on what it calls a “data lakehouse platform” that enables business intelligence and analytics tools to query data stored in object storage systems like Amazon S3 or Azure Data Lake Storage with performance comparable to traditional data warehouses. Dremio achieves this through several innovative technical approaches including an Apache Arrow-based columnar cache that dramatically accelerates query performance, automatic query acceleration through predicate pushdown and intelligent caching, semantic layer capabilities that provide business-friendly views of technical data structures, and native integration with leading BI tools including Tableau, Power BI, and Looker. The platform supports both batch and streaming workloads, making it suitable for real-time analytics use cases increasingly common as businesses demand more immediate insights.

What distinguishes Dremio in the marketplace is its emphasis on democratizing data access for business users rather than requiring constant data engineering intervention. Traditional approaches to data lake analytics required data engineers to prepare and model data before analysts could work with it, creating bottlenecks that slowed time-to-insight. Dremio’s semantic layer and self-service capabilities enable analysts to directly explore and analyze data lake contents using familiar SQL and BI tools, with the platform handling the complexity of reading various file formats, optimizing query execution, and caching frequently accessed data.

The company has invested heavily in supporting Apache Iceberg, the open table format that has emerged as the leading standard for lakehouse implementations. Dremio offers what it claims is the fastest query engine for Iceberg tables, with specific optimizations including automatic compaction, partition evolution, and metadata management that improve performance as data volumes scale. This tight integration with Iceberg ensures that customers are not locked into proprietary formats and can migrate to alternative platforms if needed, addressing a key concern for enterprises making long-term infrastructure decisions.

Dremio’s customer base spans diverse industries including financial services with clients like Swiss Re and KKR, technology companies such as FactSet and Nutanix, and numerous enterprises undergoing cloud data modernization initiatives. The company has demonstrated strong revenue growth, reportedly doubling revenue for several consecutive years, validation that its approach addresses genuine market needs. As artificial intelligence workloads increasingly drive demand for access to comprehensive data for model training and inference, Dremio’s capability to make data lakes queryable and accessible positions it well for continued growth.

3. Upsolver: The Real-Time Data Lake Automation Specialist

Upsolver has carved a distinctive niche in the data lake ecosystem by focusing specifically on streaming data and real-time analytics, addressing the reality that increasingly critical business processes require insights from continuously flowing data rather than batch-processed historical data. Founded with the recognition that traditional data lake approaches struggled with streaming data, Upsolver provides a platform that automates the complex engineering required to ingest, transform, and make streaming data immediately queryable in data lakes and lakehouses.

The platform’s core value proposition centers on eliminating the custom coding typically required for streaming data pipelines. Engineers building real-time data lake ingestion have traditionally needed to write and maintain complex code using frameworks like Apache Kafka, Apache Flink, or cloud-native streaming services. This code handles challenges including schema evolution as source data structures change, late-arriving data that complicates time-windowed aggregations, exactly-once processing semantics to prevent duplicates or data loss, and optimizing file sizes and formats for subsequent query performance. Upsolver abstracts these complexities behind a visual interface where users define desired transformations and outputs, with the platform generating and managing the underlying streaming infrastructure.

Upsolver’s technical architecture leverages cloud object storage as the foundation for both data persistence and processing state, an approach that provides several advantages including cost-effective storage at scale, elimination of specialized streaming infrastructure that can be expensive and complex to operate, and automatic recovery from failures without data loss. The platform supports ingestion from diverse streaming sources including Apache Kafka, Amazon Kinesis, Google Cloud Pub/Sub, and webhooks, and can output to various destinations including data lake storage, Snowflake, Amazon Redshift, Elasticsearch, and others. This flexibility enables organizations to build comprehensive real-time data architectures using Upsolver as the integration layer.

The company’s SQL-based transformation language enables analysts and data engineers to define streaming transformations using familiar syntax rather than learning specialized frameworks. Users can perform operations including filtering and enrichment of streaming records, time-windowed aggregations for metrics calculation, joining streaming data with static reference data, and change data capture processing for maintaining up-to-date copies of operational databases. These transformations execute continuously on incoming data, with Upsolver handling the complexity of maintaining state, managing checkpoints, and ensuring processing guarantees.

Upsolver’s focus on automation and ease of use positions it particularly well for organizations adopting real-time analytics without the deep streaming expertise that technologies like Flink require. While the platform may not offer the ultimate flexibility of writing custom code for the most complex scenarios, the majority of real-time use cases fit patterns that Upsolver can handle through configuration rather than coding. As more organizations recognize that batch processing creates unacceptable latency for critical business processes, Upsolver’s streaming-first approach addresses a growing segment of the data lake market.

4. Zetaris: The Distributed Intelligence Platform

Zetaris has emerged as an innovative player in the data lake space with its focus on what the company calls “modern lakehouse for AI,” positioning itself at the intersection of data management and artificial intelligence workloads. The Australia-based startup, which has been expanding globally including appointing cybersecurity entrepreneur Robert Herjavec as Executive Director for Global Strategy in 2025, addresses the reality that AI and machine learning initiatives often fail not due to algorithm limitations but because organizations struggle to access and prepare the diverse data these applications require.

The company’s Lightning Platform provides capabilities that span data virtualization enabling queries across distributed sources without data movement, automated data cataloging that discovers and classifies available datasets, semantic layer technology that provides business-friendly abstractions over technical schemas, and AI-specific features including model training pipeline integration and feature store capabilities for machine learning. This comprehensive scope distinguishes Zetaris from startups focused narrowly on query engines or storage management, positioning it as a complete platform for AI-driven organizations.

Zetaris’s data virtualization approach shares conceptual similarities with Starburst and Dremio but emphasizes real-time access to operational data sources alongside analytical data lakes. The platform can federate queries across transactional databases, SaaS applications, legacy systems, and modern data lakes, providing unified access for both traditional business intelligence and AI model training. This capability proves particularly valuable for organizations where critical data remains locked in operational systems that cannot be easily replicated into centralized repositories due to latency requirements, data volumes, or governance constraints.

The company’s strategic partnerships demonstrate its positioning at the cutting edge of data and AI convergence. Collaborations announced in 2025 include working with music streaming technology provider Tuned Global to turn streaming data into revenue through advanced analytics, and partnerships with AWS that position Zetaris within the broader cloud ecosystem. These partnerships provide both credibility and go-to-market channels that independent startups require to compete against established vendors with existing customer relationships.

Zetaris’s emphasis on AI readiness addresses a critical market need as organizations rush to implement generative AI and machine learning capabilities. These initiatives require access to comprehensive, high-quality data for both training and inference, often spanning multiple source systems. Zetaris’s ability to provide unified, real-time access to diverse data sources without requiring complex ETL pipelines or data replication positions it as an enabler of AI transformation. As enterprises recognize that data infrastructure limitations often represent the primary bottleneck in AI initiatives, platforms like Zetaris that specifically address AI data requirements should see growing demand.

5. iomete: The Apache Iceberg Innovator

iomete represents the new generation of data platforms built from the ground up on open lakehouse standards, specifically Apache Iceberg. The startup’s positioning emphasizes extreme value proposition with compute pricing equal to AWS on-demand rates without markups, essentially providing the platform layer for free while charging only for the underlying cloud resources consumed. This disruptive pricing model challenges established vendors that layer significant markups on top of cloud infrastructure costs.

The platform provides a comprehensive lakehouse environment including serverless Iceberg-based data lake storage, serverless Spark jobs for data processing and transformation, SQL editor for ad-hoc querying and exploration, advanced data catalog with metadata management and discovery, and built-in business intelligence capabilities with options to connect third-party tools like Tableau or Looker. This full-stack approach enables organizations to build complete analytical environments without assembling multiple vendor solutions.

What distinguishes iomete’s technical approach is its commitment to serverless architecture across all components. Users do not provision clusters or manage infrastructure, instead simply defining the workloads they need executed with the platform automatically scaling resources and shutting down when idle. This serverless model provides several advantages including elimination of idle resource costs that plague traditional cluster-based approaches, automatic scaling to handle workload variations without manual intervention, simplified operations with no infrastructure to manage or tune, and pay-per-use pricing that makes costs predictable and tied directly to business value generated.

The company’s focus on Apache Iceberg as the foundational technology proves strategic as Iceberg has emerged as the leading open table format for lakehouses. By building exclusively on Iceberg rather than supporting multiple formats, iomete can deliver deeper optimizations and tighter integration while maintaining compatibility with the broader ecosystem of Iceberg-compatible tools. Organizations adopting iomete are not locked into proprietary formats, as Iceberg tables remain accessible through any Iceberg-compatible query engine or processing framework.

iomete’s positioning targets organizations that want lakehouse capabilities without enterprise platform complexity or costs. The startup’s value proposition resonates particularly well with cloud-native companies, data teams within larger organizations seeking to establish independent analytical capabilities, and cost-conscious organizations for whom traditional enterprise platform pricing represents a barrier to adoption. While iomete may lack some enterprise features like advanced security integrations or dedicated support that large vendors provide, its combination of comprehensive functionality and aggressive pricing creates a compelling alternative for a significant market segment.

6. Qubole: The Cloud Data Platform Pioneer

Qubole stands as one of the earlier pioneers in cloud-based data lake platforms, having been founded before many competitors recognized the opportunity. The platform positions itself as providing data lake, data engineering, and analytics capabilities across multiple clouds with emphasis on automation and optimization that reduces the manual effort required to operate big data infrastructure at scale. While Qubole has raised substantial funding and achieved significant scale, it maintains startup agility and continues innovating in areas like autonomous workload optimization.

The platform’s multi-cloud architecture supports AWS, Microsoft Azure, and Google Cloud Platform, enabling organizations to avoid lock-in to specific cloud vendors while maintaining consistent data processing capabilities. This multi-cloud capability proves valuable for enterprises with complex cloud strategies, those operating in regulated industries where data residency requirements necessitate using multiple clouds, and companies hedging against vendor dependency. Qubole abstracts cloud-specific differences, providing unified interfaces for data processing regardless of underlying infrastructure.

Qubole’s autonomous platform capabilities leverage machine learning to optimize various aspects of data lake operations including automatically right-sizing compute clusters based on workload characteristics, predicting when to start clusters proactively before workloads begin, identifying opportunities to use lower-cost spot instances without compromising reliability, and detecting anomalies in data pipelines that might indicate quality issues. These autonomous capabilities reduce the specialized expertise required to operate data platforms efficiently, democratizing access to sophisticated optimizations previously available only to organizations with deep engineering bench strength.

The platform supports multiple processing engines including Apache Spark for general-purpose distributed computing, Presto for interactive SQL queries, Apache Hive for batch processing, and Airflow for workflow orchestration. This engine diversity enables organizations to select optimal tools for different workloads rather than forcing everything through a single processing paradigm. Qubole manages the complexity of operating these diverse engines, handling version upgrades, security patching, and integration between components.

While Qubole faces intense competition from both established cloud vendors offering native services and fellow startups with more modern architectures, the company’s years of operational experience have generated valuable insights embedded in its autonomous optimization capabilities. Organizations that value multi-cloud flexibility, automated operations, and support for diverse processing engines find Qubole’s platform delivers practical value that justifies its positioning in the market.

7. Dataleyk: The SMB Data Lake Democratizer

Dataleyk represents a different approach to the data lake market, targeting small and medium-sized businesses rather than enterprises and emphasizing simplicity and accessibility over comprehensive features. The startup’s mission centers on making big data analytics easy and accessible to organizations without specialized data engineering teams, recognizing that data lake benefits should not be limited to large enterprises with extensive technical resources.

The platform provides a fully managed cloud data lake that requires near-zero technical knowledge to deploy and operate. This managed approach handles infrastructure provisioning, security configuration, backup management, and routine maintenance, allowing SMBs to focus on deriving insights from their data rather than operating complex infrastructure. Dataleyk’s setup process guides users through connecting various data sources including operational databases, SaaS applications, CSV files, and APIs, with the platform handling schema discovery and data ingestion automatically.

The SQL-based exploration interface enables analysts familiar with basic SQL to query data without learning specialized big data technologies or programming languages. Users can write queries spanning multiple data sources that Dataleyk has ingested, with the platform optimizing execution and presenting results through either its built-in visualization capabilities or integration with existing business intelligence tools. This SQL-centric approach lowers the barrier to adoption as SQL skills are widespread, unlike the specialized knowledge required for technologies like Spark or Flink.

Dataleyk’s security approach encrypts all data at rest and in transit, provides role-based access controls, and offers compliance with common data protection regulations, capabilities that SMBs often struggle to implement on their own. The platform operates on subscription-based pricing designed for SMB budgets, typically starting at modest monthly fees that scale based on data volumes and user counts. This pricing makes data lake capabilities accessible to organizations for whom enterprise platform costs would be prohibitive.

The SMB market that Dataleyk targets represents a substantial opportunity as smaller organizations increasingly recognize data as a strategic asset but lack the resources to implement complex infrastructure. Dataleyk’s simplicity-first approach trades some flexibility and advanced features for dramatically easier adoption and operation. For SMBs whose analytics needs fit within Dataleyk’s capabilities, the platform provides professional data lake functionality without enterprise complexity or costs.

8. Firebolt: The Extreme Performance Cloud Warehouse

While Firebolt positions itself more as a cloud data warehouse than a pure data lake platform, its architecture and capabilities warrant inclusion as it represents an important approach to high-performance analytics on data lake storage. The Israel-based startup, founded by veterans of enterprise software companies, built Firebolt around the premise that cloud data warehouses could deliver dramatically better performance, measured in orders of magnitude, compared to established vendors by rethinking architecture from first principles for cloud environments.

The platform’s serverless architecture separates compute from storage with data persisting in low-cost object storage compatible with data lake formats. This separation provides several advantages including independent scaling of storage and compute resources, elimination of data copies that traditional architectures require, pay-per-use pricing with no idle compute costs, and the ability to run multiple workloads against the same data without duplication. Compute engines can scale elastically based on query demands, spinning up when queries arrive and terminating when idle.

Firebolt’s performance claims border on dramatic, with the company asserting order-of-magnitude improvements over competitors in query execution times. These performance gains derive from several technical innovations including proprietary data indexing that dramatically reduces the volume of data scanned for queries, vectorized query execution that leverages modern CPU capabilities for parallel processing, and aggressive use of compression and encoding optimized for analytics workloads. The platform can analyze much larger data volumes at higher granularity with faster response times than traditional approaches.

The startup’s positioning targets organizations with performance-critical analytics requirements where query latency directly impacts business operations or user experience. Use cases include customer-facing analytics embedded in applications where query latency affects user experience, real-time operational dashboards supporting time-sensitive decisions, ad-hoc exploration of massive datasets where traditional platforms deliver unacceptably slow responses, and intensive analytical workloads like fraud detection requiring rapid processing of large data volumes. For these demanding scenarios, Firebolt’s performance advantages can justify migration despite the effort of moving from existing platforms.

Firebolt competes against both established cloud warehouses like Snowflake and Databricks, and fellow startups with different architectural approaches. The company’s focus on extreme performance rather than comprehensive features creates clear differentiation, though this specialization also means Firebolt may not address all requirements of platforms with broader scope. As organizations increasingly demand real-time insights and struggle with the costs of processing ever-larger data volumes, Firebolt’s performance-first approach addresses genuine pain points in the market.

9. e6data: The Cost-Optimized Query Engine

e6data represents an emerging player in the data lake startup ecosystem, positioning itself as a query engine optimized specifically for cost efficiency alongside performance. The India-based startup addresses the reality that cloud data warehouse and query engine costs can become prohibitive as data volumes and query workloads scale, with many organizations reporting that analytics infrastructure represents a growing percentage of their cloud expenditure. e6data’s architecture aims to deliver comparable or better performance while dramatically reducing infrastructure costs.

The platform’s technical approach centers on efficient resource utilization and intelligent query optimization. Rather than brute-forcing query performance through massive compute resources, e6data employs sophisticated techniques to minimize resource consumption including adaptive query execution that adjusts plans based on runtime statistics, efficient handling of complex joins that often dominate query costs, intelligent caching and materialization of intermediate results, and support for diverse storage formats with format-specific optimizations. These techniques combine to reduce the compute resources required for given workloads, directly translating to cost savings.

e6data’s integration with major cloud platforms and data lake storage systems enables organizations to query existing data without migration or duplication. The platform works with data stored in Amazon S3, Azure Data Lake Storage, Google Cloud Storage, and on-premises storage systems, reading common formats including Parquet, ORC, Avro, and CSV. This compatibility means organizations can adopt e6data as a query layer over existing data lakes without disrupting current architectures or processes.

The startup’s positioning emphasizes transparent, predictable pricing that organizations can understand and budget for, contrasting with some cloud platforms where costs can vary dramatically based on usage patterns and resource consumption. By focusing specifically on query execution rather than providing a full platform including storage management, workflows, and governance, e6data maintains a focused value proposition that addresses a specific pain point without expanding scope beyond core competencies.

As organizations grapple with cloud cost optimization amid economic pressures, query engines like e6data that promise comparable functionality at significantly reduced costs attract attention. The startup’s India base also positions it well to serve the rapidly growing Indian market where cost sensitivity often outweighs feature preferences. While e6data faces competition from both established vendors and well-funded startups, its cost optimization focus provides clear differentiation in an increasingly crowded market.

10. Datazip: The Modern Data Integration Platform

Datazip approaches the data lake ecosystem from the perspective of data integration and workflow management, recognizing that populating and maintaining data lakes requires robust capabilities for extracting data from source systems, transforming it appropriately, and loading it into target destinations. The startup provides a no-code platform for building and managing data pipelines, data warehouses, integrations, visualizations, and collaboration, emphasizing accessibility for users without deep technical backgrounds.

The platform’s no-code approach enables business analysts and citizen developers to build data pipelines through visual interfaces rather than writing code. Users select source systems from Datazip’s library of pre-built connectors, define transformations through drag-and-drop interfaces, and specify destination systems, with the platform generating and executing the underlying data movement and transformation logic. This democratization of data engineering capabilities addresses the bottleneck created when scarce data engineering resources cannot keep pace with organizational demand for new data integration pipelines.

Datazip’s database version control capabilities provide software development rigor to data platform management, enabling teams to track changes to schemas and pipelines, roll back problematic changes, collaborate on data platform development through branching and merging, and maintain audit trails of who made what changes when. These capabilities address the reality that data platforms evolve continuously as business requirements change, and managing this evolution requires systematic approaches rather than ad-hoc modifications.

The platform includes built-in visualization and business intelligence capabilities alongside integration and warehousing, creating an integrated environment where data flows from sources through transformation and storage to consumption through dashboards and reports. This integration eliminates the context switching between tools that can slow analytical workflows, though organizations with sophisticated BI requirements may still prefer specialized tools like Tableau or Looker that offer more advanced capabilities.

Datazip’s focus on simplifying data lake population and maintenance addresses a critical challenge, as many organizations find that building initial data lakes proves easier than maintaining them as source systems evolve, requirements change, and data quality issues emerge. The platform’s no-code approach and workflow automation capabilities reduce the ongoing effort required to keep data lakes current and valuable. While Datazip competes with established data integration vendors and other startups in a crowded market, its integrated approach spanning ingestion, warehousing, and visualization provides differentiation for organizations seeking consolidated platforms rather than best-of-breed point solutions.

The Future of Data Lake Innovation

As the data infrastructure landscape continues evolving through 2026 and beyond, several trends are reshaping opportunities for startups in this space. The convergence of data lakes and data warehouses into lakehouse architectures has become the dominant pattern, with virtually all serious startups building on open table formats like Apache Iceberg rather than proprietary storage layers. This standardization on open formats reduces switching costs and vendor lock-in, potentially accelerating customer willingness to adopt startup platforms.

Artificial intelligence workloads are fundamentally changing data platform requirements, with model training demanding access to massive volumes of diverse data, inference requiring real-time access to up-to-date information, and model monitoring necessitating comprehensive observability. Startups that successfully position their platforms as AI-ready infrastructure, like Zetaris with its explicit AI focus, may capture disproportionate value as AI adoption accelerates across industries. The integration of data platforms with AI model development, deployment, and governance tools will likely deepen as these capabilities become inseparable.

Real-time and streaming analytics requirements continue intensifying as businesses demand more immediate insights to support operational decisions. The batch processing paradigm that characterized first-generation data lakes increasingly gives way to continuous processing that makes data available for analysis with minimal latency. Startups like Upsolver that specialize in streaming data lake ingestion address this trend directly, while broader platforms must incorporate streaming capabilities to remain competitive.

Cost optimization has emerged as a critical priority as cloud bills grow and economic pressures force organizations to justify every dollar of technology spending. Startups that can deliver comparable functionality at dramatically lower costs, whether through technical innovation like e6data’s efficient query execution or business model innovation like iomete’s no-markup pricing, address genuine pain points. The transparency of cloud costs makes the value proposition of cost-optimized solutions immediately quantifiable, potentially accelerating sales cycles.

The startups profiled in this article represent diverse approaches to data lake innovation, from well-funded unicorns like Starburst and Dremio with comprehensive platforms and enterprise go-to-market strategies, to focused specialists like Upsolver addressing streaming use cases, to accessible platforms like Dataleyk democratizing capabilities for SMBs. Each has identified specific market segments and pain points where their distinctive approaches create genuine value. As the data infrastructure market continues its remarkable growth driven by digital transformation, cloud adoption, and AI initiatives, these innovative startups will play essential roles in shaping how organizations store, process, and derive insights from the ever-expanding volumes of data that increasingly define competitive advantage in the modern economy.