10 Steps to Implement Artificial Intelligence in your Business for Growth

The technology industry is in awe of artificial intelligence (AI). Large enterprises are integrating AI across the entire tech stack, embedding virtual assistants and chatbots in their solutions. According to a recent report, the global AI market is expected to grow at an annual rate of approximately 33.2% through 2025.

Businesses can use artificial intelligence to automate a range of processes, saving time, improving productivity, and freeing employees to focus on more critical work. By taking over repetitive tasks, automation helps you achieve greater output in less time and at a lower cost.

A company’s greatest asset is its people, and AI can give them back time by handling less important, time-consuming tasks.

Our existing solutions are becoming even smarter with artificial intelligence, which allows us to leverage data more effectively. Embedding machine learning algorithms, computer vision, natural language processing, and deep learning into any platform or solution has never been easier.

Artificial intelligence can transform a company’s critical business processes, including collaboration, control, reporting, and scheduling. This blog discusses how to implement AI efficiently and effectively within an organization.

- Understand and research

To begin, become familiar with enterprise artificial intelligence. You can consult pure-play AI companies for advice on how to approach it and explore the wealth of information available online; some universities, for example, publish papers and videos on AI techniques and principles.

Your IT team can also look into paid and free resources such as Microsoft’s open-source Cognitive Toolkit, Google’s TensorFlow software library, AI Resources, the Association for the Advancement of Artificial Intelligence (AAAI) Resources, and MonkeyLearn’s Gentle Guide to Machine Learning.

The more research you do, the better prepared you will be for your organization’s AI initiative, and the better you will understand what to expect from it.

- Determine the use case

Once you know what AI can do, identify what you want it to do for your business. Figure out how you can incorporate AI into your products and services, and invest time and effort in building specific use cases that help your business overcome its challenges. Consider, for instance, an existing tech program and its challenges: if you can demonstrate how image recognition, ML, or other technologies can fit into it and improve the product, you will make a compelling case.

- Attribute financial value

Analyze the business impact of those use cases and project the financial value of the AI initiatives. Keeping AI initiatives connected to business outcomes will ensure that you’re never lost in details and can always keep the end in mind. Next, prioritize your AI efforts. You will have a clear picture of which initiatives to pursue first if you put them all into a matrix of potential and complexity.
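To make the prioritization step concrete, here is a minimal Python sketch that sorts candidate initiatives into a value-versus-complexity matrix. The initiative names, the 1 to 10 scores, and the threshold of 5 are hypothetical placeholders; in practice you would plug in the financial projections and complexity estimates from your own analysis.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Initiative:
    name: str
    value: int       # projected business value, 1 (low) to 10 (high); hypothetical scale
    complexity: int  # implementation complexity, 1 (low) to 10 (high); hypothetical scale


def quadrant(item: Initiative, threshold: int = 5) -> str:
    """Place an initiative in one quadrant of a value/complexity matrix."""
    high_value = item.value > threshold
    high_complexity = item.complexity > threshold
    if high_value and not high_complexity:
        return "quick win"
    if high_value and high_complexity:
        return "strategic bet"
    if not high_value and not high_complexity:
        return "fill-in"
    return "deprioritize"


def prioritize(items: list[Initiative]) -> list[tuple[str, str]]:
    """Quick wins first, then by value (high to low) and complexity (low to high)."""
    order = {"quick win": 0, "strategic bet": 1, "fill-in": 2, "deprioritize": 3}
    ranked = sorted(items, key=lambda i: (order[quadrant(i)], -i.value, i.complexity))
    return [(i.name, quadrant(i)) for i in ranked]


# Hypothetical use cases with made-up scores.
candidates = [
    Initiative("Invoice data extraction", value=7, complexity=3),
    Initiative("Demand forecasting", value=9, complexity=8),
    Initiative("Internal FAQ chatbot", value=4, complexity=2),
    Initiative("Fully autonomous pricing", value=3, complexity=9),
]

for name, bucket in prioritize(candidates):
    print(f"{bucket:>14}: {name}")
```

A four-quadrant split is only one convention; some teams prefer a weighted score, but the matrix keeps the trade-off visible to non-technical stakeholders.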

- Recognize skill gaps

Now that you have prioritized your AI initiatives, it’s time to make sure you have enough supplies in the pantry. Wanting to accomplish something is one thing; having the organizational skills to do it is quite another. Before launching a full-blown AI implementation, assess your internal resources, identify skill gaps, and decide on a course of action. A pure-play product engineering company that specializes in AI may be able to help you with this.

- SMEs guide the pilot phase.

Business owners should start developing and integrating AI within the business stack as soon as they are ready. Keep a project mindset, and always keep your business goals in mind. To ensure that you are on track, you might consult with subject matter experts in the field or external AI consultants. Pilot programs will provide a taste of what will be involved in implementing an AI solution in the long run.

A successful pilot strengthens your business case and helps you decide whether a full rollout still makes sense. To succeed, you will need a team that combines people from your company with people knowledgeable about AI, and consulting SMEs or other outside professionals is especially valuable at this stage.

- Data-massaging

AI/ML implementations succeed when they are built on high-quality data, which must be cleaned, manipulated, and processed to yield better results. Enterprises typically store data in a variety of systems and silos. To address this, create a small, cross-functional unit to combine different data sets, resolve discrepancies, and ensure the resulting data is of high quality.
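As an illustration of what such a unit might do, the sketch below merges customer records from two hypothetical silos (a CRM export and a billing system), normalizes inconsistent formatting, and flags conflicting values for a person to review instead of silently overwriting them. The field names and the matching rule (a normalized email address) are assumptions made for the example.

```python
from __future__ import annotations


def normalize_email(email: str) -> str:
    """Lower-case and trim an email so the same customer matches across systems."""
    return email.strip().lower()


def merge_sources(crm: list[dict], billing: list[dict]) -> tuple[dict, list[str]]:
    """Merge two record lists keyed by normalized email.

    Returns the merged records and a list of human-readable conflicts.
    """
    merged: dict[str, dict] = {}
    conflicts: list[str] = []
    for source_name, records in (("crm", crm), ("billing", billing)):
        for record in records:
            key = normalize_email(record["email"])
            # Trim stray whitespace; the normalized key replaces the raw email.
            clean = {k: (v.strip() if isinstance(v, str) else v)
                     for k, v in record.items() if k != "email"}
            target = merged.setdefault(key, {"email": key})
            for field, value in clean.items():
                if value in (None, ""):
                    continue  # a missing value never overwrites a known one
                if field in target and target[field] != value:
                    conflicts.append(
                        f"{key}: {field} is {target[field]!r} but {source_name} says {value!r}"
                    )
                    continue  # keep the first value; a person resolves the conflict
                target[field] = value
    return merged, conflicts


crm_rows = [
    {"email": "Ana@Example.com ", "name": "Ana Silva", "city": "Lisbon"},
    {"email": "bo@example.com", "name": "Bo Chen", "city": ""},
]
billing_rows = [
    {"email": "ana@example.com", "name": "Ana Silva", "city": "Porto"},
    {"email": "BO@example.com", "name": "Bo Chen", "city": "Oslo"},
]

records, issues = merge_sources(crm_rows, billing_rows)
print(records)
print(issues)
```

Here Bo’s missing city is filled in from billing, while Ana’s two different cities are reported rather than guessed, which is the kind of discrepancy the cross-functional unit would resolve.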

- Taking baby steps

Start small: test AI thoroughly on a small data set, then increase the volume incrementally while collecting feedback continuously.
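A minimal sketch of this staged approach follows. It uses synthetic data and a deliberately simple threshold classifier as stand-ins for your real data and model (both are assumptions for illustration), and the 0.85 accuracy target and stage sizes are arbitrary placeholders. Each stage trains on more data, scores the model on the same held-out set, and stops scaling if the score misses the target.

```python
from __future__ import annotations

import random


def make_data(n: int, rng: random.Random) -> list[tuple[float, int]]:
    """Synthetic labelled data: class 0 centred at 0.0, class 1 centred at 3.0."""
    rows = []
    for _ in range(n):
        label = rng.randint(0, 1)
        rows.append((rng.gauss(3.0 * label, 1.0), label))
    return rows


def train_threshold(rows: list[tuple[float, int]]) -> float:
    """Learn a decision threshold halfway between the two class means."""
    zeros = [x for x, y in rows if y == 0]
    ones = [x for x, y in rows if y == 1]
    return (sum(zeros) / len(zeros) + sum(ones) / len(ones)) / 2


def accuracy(threshold: float, rows: list[tuple[float, int]]) -> float:
    correct = sum(1 for x, y in rows if (x > threshold) == (y == 1))
    return correct / len(rows)


def staged_rollout(stages: list[int], target: float = 0.85, seed: int = 42):
    """Train on progressively larger samples; stop scaling if accuracy misses target."""
    rng = random.Random(seed)
    holdout = make_data(500, rng)  # fixed evaluation set, reused at every stage
    pool = make_data(max(stages), rng)
    history = []
    for size in stages:
        threshold = train_threshold(pool[:size])
        score = accuracy(threshold, holdout)
        history.append((size, round(score, 3)))
        if score < target:
            break  # gather feedback and fix issues before adding more data
    return history


print(staged_rollout([50, 200, 1000]))
```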

- Prepare a storage plan.

Once your small data set is up and running, start planning for additional storage if you intend to implement a full-scale data input solution. The algorithm must be fast as well as accurate, and managing massive amounts of data requires a high-performance data management solution backed by fast, optimized storage.
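Much of storage planning is arithmetic. The rough sketch below assumes you can estimate daily record volume, average record size, retention period, a replication factor, and some growth headroom; the default replication of 3 and 30% headroom, and all the figures in the example call, are hypothetical.

```python
def storage_needed_tb(records_per_day: int, bytes_per_record: int,
                      retention_days: int, replication: int = 3,
                      headroom: float = 0.3) -> float:
    """Estimate total storage in terabytes (10**12 bytes).

    raw = records/day * bytes/record * days retained, then multiplied by
    the number of replicas and padded with headroom for growth.
    """
    raw_bytes = records_per_day * bytes_per_record * retention_days
    total_bytes = raw_bytes * replication * (1 + headroom)
    return total_bytes / 10**12


# Hypothetical workload: 50 million records a day, about 2 KB each, kept for a year.
print(f"{storage_needed_tb(50_000_000, 2_000, 365):.1f} TB")
```

In this example the raw data alone is 100 GB a day, or 36.5 TB a year, before replication and headroom roughly quadruple it, which is why the storage plan should be settled before the data set grows.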

- Managing Change

AI brings better insight and automation, but it also requires employees to work differently. Change is never easy, and some employees will be more hesitant than others. Introducing an AI solution that augments their daily tasks therefore calls for a formal change management initiative.

- Build efficiently and securely

Companies often develop AI solutions that focus on a specific challenge or aspect without analyzing the limitations and requirements of the solution as a whole. The result may be suboptimal, dysfunctional, or insecure. To achieve optimal computing performance, you need to balance storage, graphics processing units (GPUs), and networking.

Companies also often overlook security until after a project has been implemented. Protect your system with data encryption, VPNs, malware blockers, and similar safeguards.

Implementing artificial intelligence is not a walk in the park. As with any technology, the most challenging issue is overcoming adoption hurdles, and introducing any new technology requires data literacy and trust. AI initiatives should be a strategic component of your organization that matures alongside your data management strategy; the key to success is advancing both at the same time.

What is artificial intelligence (AI)?

Artificial intelligence is the simulation of human intelligence by computer systems. Specific applications of AI include expert systems, natural language processing, and machine vision.

AI: How does it work?

As the hype around AI has grown, vendors have scrambled to promote how their products and services use it. Often, what they call AI is just one component of it, such as machine learning. AI requires a foundation of specialized hardware and software for writing and training machine learning algorithms. No single programming language is synonymous with AI, but Python, R, and Java are among the most popular.

In general, AI systems work by ingesting large amounts of labelled training data, analyzing it for correlations and patterns, and using those patterns to make predictions about future states. In this way, a chatbot fed hundreds of thousands of example conversations can learn to produce lifelike exchanges with people, and an image recognition tool can learn to identify and describe objects in images by reviewing millions of examples.
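The learn-patterns-then-predict loop can be illustrated with one of the simplest supervised learners, a nearest-centroid classifier: it "learns" the average feature vector for each label and predicts the label whose average is closest to a new example. The fruit measurements below are made-up toy data; real chatbots and image recognizers use far larger data sets and far more complex models.

```python
from math import dist


def train_centroids(examples):
    """Learn one centroid (average feature vector) per label from labelled data."""
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def predict(centroids, features):
    """Predict the label whose centroid is closest (Euclidean distance)."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical labelled training data: (weight in grams, diameter in cm) -> fruit.
training = [
    ((150.0, 7.5), "apple"),
    ((170.0, 8.0), "apple"),
    ((160.0, 7.8), "apple"),
    ((5.0, 1.8), "grape"),
    ((6.0, 2.0), "grape"),
    ((4.5, 1.7), "grape"),
]

model = train_centroids(training)
print(predict(model, (155.0, 7.6)))  # → apple
print(predict(model, (5.5, 1.9)))    # → grape
```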

Learning, reasoning, and self-correction are three cognitive skills that AI programming emphasizes:

- The learning process:

This aspect of AI programming focuses on acquiring data and creating rules for turning it into actionable information. These rules, called algorithms, give computer systems step-by-step instructions for performing specific tasks.

- Reasoning processes:

This aspect of AI programming focuses on choosing the right algorithm to reach a desired outcome.

- Self-correction processes:

This aspect of AI programming is designed to fine-tune algorithms continuously so that they deliver the most accurate results possible.
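One common way to picture self-correction is an optimization loop that measures its own error and adjusts itself to reduce it. The sketch below uses gradient descent to fit a straight line; it is a toy stand-in chosen for illustration, not how any particular AI product works. The data points are generated from y = 2x + 1 so the correct answer is known in advance, and the learning rate and epoch count are assumptions tuned for this data.

```python
def fit_line(xs, ys, learning_rate=0.05, epochs=1000):
    """Fit y ≈ w*x + b by gradient descent on mean squared error.

    Each epoch measures the current error and nudges w and b in the
    direction that reduces it: a simple form of self-correction.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    history = []
    for _ in range(epochs):
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        history.append(sum(e * e for e in errors) / n)  # current mean squared error
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b, history


xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]  # generated by y = 2x + 1
w, b, history = fit_line(xs, ys)
print(round(w, 3), round(b, 3))  # → 2.0 1.0
```

The `history` list records the error at every step, so you can see it shrink as the model corrects itself.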

Artificial intelligence: why is it so important?

AI is important because it can give enterprises insights into their operations that they may not have been aware of, and because, in some cases, it can perform tasks better than humans. Particularly for repetitive, detail-oriented tasks, such as analyzing large numbers of legal documents to ensure relevant fields are filled in correctly, AI tools often complete work quickly and with relatively few errors.

In some large enterprises, this has dramatically improved efficiency and opened up entirely new revenue streams. Uber, for example, has become a world leader in ride-hailing by using computer software to connect passengers with drivers.

Before the current wave of AI, it would have been hard to imagine using software to connect riders with drivers at this scale. Uber uses sophisticated machine learning algorithms to predict when people are likely to need rides in a certain area, which helps it proactively dispatch drivers before they are needed.
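The demand-prediction idea can be illustrated with a deliberately naive forecast: average past ride requests for the same area and hour, then work out how many extra drivers to position there. This is a hypothetical sketch with invented numbers and an assumed capacity of two rides per driver per hour; it is not Uber’s method, which relies on far more sophisticated machine learning models.

```python
from collections import defaultdict
from statistics import mean


def forecast_demand(history):
    """Forecast requests per (area, hour) as the mean over past days.

    history: iterable of (day, area, hour, requests) tuples.
    """
    buckets = defaultdict(list)
    for _day, area, hour, requests in history:
        buckets[(area, hour)].append(requests)
    return {key: mean(values) for key, values in buckets.items()}


def drivers_to_dispatch(forecast, available, area, hour, rides_per_driver=2):
    """Extra drivers needed so that forecast demand is covered (never negative)."""
    expected = forecast.get((area, hour), 0)
    needed = -(-expected // rides_per_driver)  # ceiling division
    return max(0, int(needed) - available.get(area, 0))


# Hypothetical ride requests at 18:00 on three previous days.
past_requests = [
    ("mon", "downtown", 18, 40), ("tue", "downtown", 18, 44), ("wed", "downtown", 18, 48),
    ("mon", "airport", 18, 10), ("tue", "airport", 18, 14),
]
forecast = forecast_demand(past_requests)
available = {"downtown": 15, "airport": 8}

print(drivers_to_dispatch(forecast, available, "downtown", 18))  # → 7
print(drivers_to_dispatch(forecast, available, "airport", 18))   # → 0
```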

Google is another example: machine learning has helped make it one of the largest providers of a wide range of online services. In 2017, Google CEO Sundar Pichai declared that the company would operate as an “AI first” organization.

AI has been used to improve the operations of today’s largest and most successful companies, allowing them to gain a competitive advantage over their rivals.

Artificial intelligence has both advantages and disadvantages.

AI technologies, such as artificial neural networks and deep learning, are advancing rapidly because AI can process vast amounts of data much faster than humans can and often makes more accurate predictions.

AI applications that use machine learning can take the massive amount of data generated every day and quickly turn it into useful information. The primary disadvantage of AI is cost: processing the large amounts of data that AI programming requires is expensive.

Benefits:

- Focused on details;

- Data-intensive tasks are reduced in time;

- Consistently delivers results;

- Agents powered by artificial intelligence are always available.

Negatives:

- Costly;

- Technically complex;

- The availability of qualified workers for the development of AI tools is limited;

- Only knows what it has been shown; and

- Lack of generalization between tasks.

Strong AI versus weak AI

Artificial intelligence can be categorized as either weak or strong.

- Weak AI, also known as narrow AI, is designed and trained to complete a specific task. Industrial robots and virtual personal assistants, such as Apple’s Siri, use weak AI.

- Strong AI, also known as artificial general intelligence (AGI), describes programming that can replicate the cognitive abilities of humans. When presented with an unfamiliar task, a strong AI system could use fuzzy logic to apply knowledge from one domain to another and find a solution autonomously. The Turing Test and the Chinese room test are two ways of assessing whether a program can behave intelligently.

Artificial intelligence can be categorized into four types.

In a 2016 article, Arend Hintze, an assistant professor of integrative biology and computer science and engineering at Michigan State University, explained that AI can be categorized into four types, starting with the task-specific intelligent systems in use today and progressing to sentient systems, which do not yet exist. The categories are as follows:

- Type 1: Reactive machines:

These AI systems have no memory and are task-specific. An example is Deep Blue, the IBM chess program that defeated Garry Kasparov in the 1990s. Deep Blue can identify pieces on a chessboard and make predictions, but because it has no memory, it cannot use past experiences to inform future ones.

- Type 2: Limited memory:

These AI systems have memory, so they can draw on past experiences to guide future decisions. Some of the decision-making methods used in self-driving cars are based on this concept.

- Type 3: Theory of mind:

Theory of mind is a psychology term. Applied to AI, it means a system would have the social intelligence to understand emotions. This type of AI would be able to infer human intentions and predict behaviour, a skill that is fundamental for AI systems to work as part of human teams.

- Type 4: Self-awareness:

AI systems in this category have a sense of self, which gives them consciousness. A self-aware machine understands its own current state. This type of AI does not yet exist.

How is AI technology used today, and what are examples?

Various types of technology incorporate artificial intelligence. Some examples include:

Automation: When paired with AI technologies, automation tools can expand the volume and types of tasks performed. Robotic process automation (RPA), for example, is software that automates repetitive, rules-based business processes. When combined with machine learning and emerging AI tools, RPA can automate a larger share of enterprise jobs, enabling tactical RPA bots to pass along intelligence from AI and respond to process changes.

Machine learning: The science of getting a computer to act without being explicitly programmed. Deep learning is a subset of machine learning that, in very simple terms, can be thought of as the automation of predictive analytics. Machine learning algorithms fall into three categories:

- Supervised learning: Data sets are labelled so that patterns can be detected and used to label new data sets.

- Unsupervised learning: Data sets aren’t labelled and are sorted according to similarities or differences.

- Reinforcement learning: Data sets aren’t labelled, but after performing an action or several actions, the AI system is given feedback.
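To make the unsupervised and reinforcement categories more concrete, the sketch below pairs two classic textbook algorithms: k-means clustering (in one dimension) groups unlabelled numbers by similarity, and an epsilon-greedy multi-armed bandit learns which action pays off best purely from reward feedback. The data, reward probabilities, and parameters are invented for illustration.

```python
import random


def kmeans_1d(values, k, iterations=20):
    """Unsupervised: group unlabelled numbers into k clusters (Lloyd's algorithm)."""
    if not 1 <= k <= len(values):
        raise ValueError("k must be between 1 and the number of values")
    step = max(1, len(values) // k)
    centroids = sorted(values)[::step][:k]  # spread-out initial guesses
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda i: abs(v - centroids[i]))
            clusters[nearest].append(v)
        # Move each centroid to its cluster's mean; keep it if the cluster is empty.
        centroids = [sum(c) / len(c) if c else centroids[i] for i, c in enumerate(clusters)]
    return sorted(centroids)


def epsilon_greedy(reward_probs, steps=2000, epsilon=0.1, seed=0):
    """Reinforcement: learn the best action from reward feedback alone.

    reward_probs is used only to simulate the environment; the agent
    never reads it directly and sees only the rewards it receives.
    """
    rng = random.Random(seed)
    n_actions = len(reward_probs)
    counts = [0] * n_actions
    estimates = [0.0] * n_actions
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)  # explore
        else:
            action = max(range(n_actions), key=lambda a: estimates[a])  # exploit
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0  # feedback
        counts[action] += 1
        estimates[action] += (reward - estimates[action]) / counts[action]  # running mean
    best = max(range(n_actions), key=lambda a: estimates[a])
    return best, estimates


print(kmeans_1d([1.0, 1.2, 0.8, 10.0, 10.5, 9.5], k=2))
print(epsilon_greedy([0.2, 0.5, 0.8]))
```

Neither algorithm is given a labelled answer: k-means finds structure on its own, and the bandit discovers the best action only through trial and feedback.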

Machine vision: This technology gives a machine the ability to see. Machine vision captures and analyses visual information using a camera, analogue-to-digital conversion, and digital signal processing. It is often compared to human eyesight, but it is not bound by biology and can be programmed to see through walls, for example. It is used in a range of applications, from signature identification to medical image analysis. Machine vision is often confused with computer vision, which focuses on machine-based image processing.

Natural language processing (NLP): The processing of human language by a computer program. One of the best-known uses of NLP is spam detection, which looks at the subject line and text of an email and decides whether it is junk. Current approaches to NLP are based on machine learning, and NLP tasks include text translation, sentiment analysis, and speech recognition.
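Spam detection is a classic textbook case for a naive Bayes classifier, which compares how often each word appears in known spam versus legitimate mail (often called "ham"). The sketch below is a minimal illustration trained on four invented messages; real filters learn from far larger collections of email and use many more signals than the words in the subject and body.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lower-case the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesSpamFilter:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self):
        self.word_counts = {"spam": Counter(), "ham": Counter()}
        self.doc_counts = Counter()

    def train(self, subject, body, label):
        self.doc_counts[label] += 1
        self.word_counts[label].update(tokenize(subject + " " + body))

    def is_spam(self, subject, body):
        words = tokenize(subject + " " + body)
        vocab = set(self.word_counts["spam"]) | set(self.word_counts["ham"])
        total_docs = sum(self.doc_counts.values())
        scores = {}
        for label, counts in self.word_counts.items():
            total_words = sum(counts.values())
            score = math.log(self.doc_counts[label] / total_docs)  # prior probability
            for w in words:
                # Smoothed likelihood; unseen words get a small non-zero probability.
                score += math.log((counts[w] + 1) / (total_words + len(vocab)))
            scores[label] = score
        return scores["spam"] > scores["ham"]


spam_filter = NaiveBayesSpamFilter()
for subject, body, label in [
    ("WIN a FREE prize", "Click now to claim your free prize money", "spam"),
    ("Cheap meds", "Buy cheap meds now, limited offer", "spam"),
    ("Meeting agenda", "Attached is the agenda for tomorrow's project meeting", "ham"),
    ("Lunch?", "Are you free for lunch tomorrow with the team", "ham"),
]:
    spam_filter.train(subject, body, label)

print(spam_filter.is_spam("Claim your free prize now", ""))  # → True
print(spam_filter.is_spam("Project meeting tomorrow", "Can we move the meeting to the afternoon"))  # → False
```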

Robotics: This field of engineering focuses on the design and manufacture of robots. Robots are often used to perform tasks that are difficult for humans to perform or to perform consistently. For example, robots are used on automobile assembly lines, and NASA uses them to move large objects in space. Researchers are also building robots that can interact in social settings.

Self-driving cars: The main goal of developing autonomous vehicles is to build technology capable of keeping the vehicle within a given lane and avoiding unexpected obstacles, such as pedestrians. This technology is based on computer vision, image recognition and deep learning.

Artificial intelligence: what are its applications?

A wide variety of markets have embraced artificial intelligence. Below are nine examples:

- AI in healthcare:

The biggest bets are on improving patient outcomes and reducing costs. Companies are applying machine learning to make medical diagnoses better and faster than humans can. One of the best-known healthcare technologies is IBM Watson.

Watson understands natural language and can answer questions asked of it. The system mines patient data and other available data sources to form a hypothesis, which it then presents along with a confidence score.

Other AI applications include online virtual assistants that help patients and healthcare customers find health information, schedule appointments, understand the billing process, and complete other administrative tasks. AI technology is also being used to predict, fight, and understand pandemics such as COVID-19.

- AI in business:

Customer relationship management (CRM) and analytics platforms are integrating machine learning algorithms to uncover how to serve customers better. Customer service is provided through chatbots incorporated into websites. Researchers and IT analysts are also talking about the automation of job positions.

- AI in education:

AI can automate grading, giving educators more time. It can assess students and adapt to their needs, helping them progress at their own pace, and AI tutors can provide additional support to keep students on track. AI may also change where and how students learn, and could even replace some teachers.

- AI in finance:

Personal finance applications such as Intuit Mint and TurboTax use artificial intelligence to transform how consumers manage their money. Applications like these collect and analyze personal financial data. Other programs, including IBM Watson, have been applied to simplify the process of buying a home. Today, artificial intelligence software performs much of the trading on Wall Street.

- AI in law:

The discovery process in law, which involves sifting through large volumes of documents, is often overwhelming for humans. Using artificial intelligence to automate labour-intensive legal processes can save time and improve client service. Law firms use machine learning to describe data and predict outcomes, and computer vision and natural language processing to classify and extract information from documents.

- AI in manufacturing:

Manufacturing has been at the forefront of incorporating robots into the workflow. Industrial robots that were once programmed to perform single tasks and kept separate from human workers increasingly function as cobots: smaller, multitasking robots that collaborate with human workers and take on more parts of the job in warehouses, on factory floors, and in other workplaces.

- AI in banking:

Banking institutions increasingly utilize chatbots to inform clients about services and handle transactions without involving humans. Compliance with banking regulations is made easier and more cost-effective with AI virtual assistants. As part of their decision-making process, banks use AI to set credit limits, determine investment opportunities, and improve loan approvals.

- AI in transportation:

AI technology in transportation extends beyond autonomous vehicles: it is used to manage traffic, predict flight delays, and make ocean shipping safer and more efficient.

- AI in security:

Machine learning and AI are among the top buzzwords security vendors use today to differentiate their products. The technologies they entail are also extremely practical. For instance, security information and event management (SIEM) software and related solutions use machine learning to detect anomalies and identify suspicious activities that may indicate security threats.

By analyzing data and applying logic to identify similarities to known malicious code, AI can detect new and emerging attacks much sooner than human employees and previous technologies. The maturing technology is playing a significant role in helping organizations fend off cyber attacks.
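A statistical baseline is one of the simplest forms of the anomaly detection described above. This hypothetical sketch flags hours whose failed-login count sits far above normal, using a z-score (the number of standard deviations from the baseline mean). The counts and the three-sigma threshold are assumptions for illustration; commercial SIEM products use much richer machine learning models.

```python
from statistics import mean, stdev


def find_anomalies(baseline, observed, threshold=3.0):
    """Flag observations more than `threshold` standard deviations above the baseline mean.

    baseline: historical counts considered normal.
    observed: list of (label, count) pairs to check.
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        return []  # no variation in the baseline, so z-scores are undefined
    flagged = []
    for label, count in observed:
        z = (count - mu) / sigma
        if z > threshold:
            flagged.append((label, count, round(z, 1)))
    return flagged


# Hypothetical failed-login counts per hour on a normal day.
normal_hours = [4, 6, 5, 7, 5, 6, 4, 5, 6, 5]
today = [("09:00", 6), ("10:00", 5), ("11:00", 48), ("12:00", 7)]

for hour, count, z in find_anomalies(normal_hours, today):
    print(f"{hour}: {count} failed logins (z = {z}), possible brute-force attempt")
```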

Augmented intelligence vs artificial intelligence

Some industry experts believe the term artificial intelligence is too closely linked to popular culture, which has given the general public unrealistic expectations about how AI will change the workplace and everyday life.

- Augmented intelligence: Some researchers and marketers hope that this term, which has a more neutral connotation, will help people understand that most implementations of AI will be weak and will simply improve products and services rather than replace humans. Examples include automatically surfacing important information in business intelligence reports or highlighting important information in legal filings.

- Artificial intelligence: True AI, or artificial general intelligence (AGI), is closely associated with the concept of the technological singularity: a future ruled by an artificial superintelligence that far surpasses the human brain’s ability to understand it or how it is shaping our reality. This remains in the realm of science fiction, although some developers are working on the problem. Many believe that technologies such as quantum computing could play an important role in making AGI a reality, and that the term AI should be reserved for this kind of general intelligence.

Artificial intelligence and its ethical use

While AI tools provide a range of new functionality for businesses, their use also raises ethical questions because, for better or worse, an AI system reinforces what it has already learned.

This can be problematic because many of the most advanced AI tools rely on machine learning algorithms, which can only learn from the data provided to them during training. Because humans select which data is used to train an AI program, the potential for machine learning bias is inherent and must be monitored closely.

Anyone using machine learning in real-world, in-production systems needs to factor ethics into the AI training process and strive to avoid bias. This is especially true for AI algorithms that are inherently unexplainable, such as those used in deep learning and generative adversarial network (GAN) applications.

Explainability may be a stumbling block to using AI in industries that operate under strict regulatory compliance requirements. Financial institutions in the United States, for instance, are required to explain their credit-issuing decisions. When an AI tool makes such decisions by teasing out subtle correlations among thousands of variables, explaining why credit was refused can be difficult. When a program’s decision-making process cannot be explained, it is often referred to as black-box AI.
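By contrast with black-box models, a simple linear score can report exactly why it reached a decision, because each input’s effect is just its weight times its value. The sketch below is hypothetical throughout: the features, weights, cutoff, and best-case reference profile are invented, and it illustrates explainability in general, not any lender’s actual model or a compliance-ready method. Reasons are ranked by how many points each feature cost the applicant relative to the reference profile.

```python
# Hypothetical, hand-picked weights for an interpretable linear credit score.
WEIGHTS = {
    "payment_history": 0.5,      # share of on-time payments, 0 to 1
    "credit_utilization": -0.3,  # share of available credit in use, 0 to 1
    "years_of_history": 0.02,    # length of credit history in years
}
BEST_PROFILE = {"payment_history": 1.0, "credit_utilization": 0.0, "years_of_history": 10}
BIAS = 0.1
APPROVAL_CUTOFF = 0.5


def score_with_reasons(applicant, top_n=2):
    """Return (approved, score, reasons), where reasons name the costliest features."""
    score = BIAS + sum(WEIGHTS[f] * applicant[f] for f in WEIGHTS)
    # Points lost on each feature compared with the best-case reference profile.
    shortfall = {f: WEIGHTS[f] * (applicant[f] - BEST_PROFILE[f]) for f in WEIGHTS}
    reasons = sorted(shortfall, key=shortfall.get)[:top_n]  # biggest losses first
    return score >= APPROVAL_CUTOFF, round(score, 3), reasons


applicant = {"payment_history": 0.6, "credit_utilization": 0.9, "years_of_history": 2}
print(score_with_reasons(applicant))
```

For this invented applicant the score falls below the cutoff, and the model can state that high credit utilization and a weak payment history cost the most points, an explanation that a deep learning model cannot produce as directly.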

Despite the potential risks, few laws currently regulate the use of AI tools, and where laws do exist, they typically affect AI only indirectly. As described above, U.S. Fair Lending regulations require financial institutions to explain credit decisions to potential customers. This limits the extent to which lenders can use deep learning algorithms, which are by nature opaque and hard to explain.

The European Union’s General Data Protection Regulation (GDPR) imposes strict limits on how enterprises can use consumer data, which makes it harder to train and operate many consumer-facing AI applications.

In October 2016, the National Science and Technology Council issued a report examining the role government regulation might play in AI development, but it made no specific recommendations for legislation.

Crafting laws to regulate AI will not be easy, partly because AI comprises a variety of technologies used for different ends, and partly because regulation can come at the cost of AI progress and development. The rapid evolution of AI technologies is another obstacle to meaningful regulation: new applications and technological advances can make existing laws obsolete almost immediately.

For example, existing laws governing the privacy of conversations and recorded conversations do not address the challenge posed by voice assistants such as Amazon’s Alexa and Apple’s Siri, which gather conversations but do not distribute them, except to the companies’ technology teams, which use them to improve machine learning algorithms. And the laws that governments do manage to enact to regulate AI do little to stop criminals from exploiting the technology.

Artificial Intelligence and cognitive computing

Occasionally, the terms artificial intelligence and cognitive computing are used interchangeably. In most cases, however, AI refers to machines that replace human intelligence by simulating how we sense, learn, process, and react to information in our surroundings.

A product or service that mimics and augments human thought is called cognitive computing.

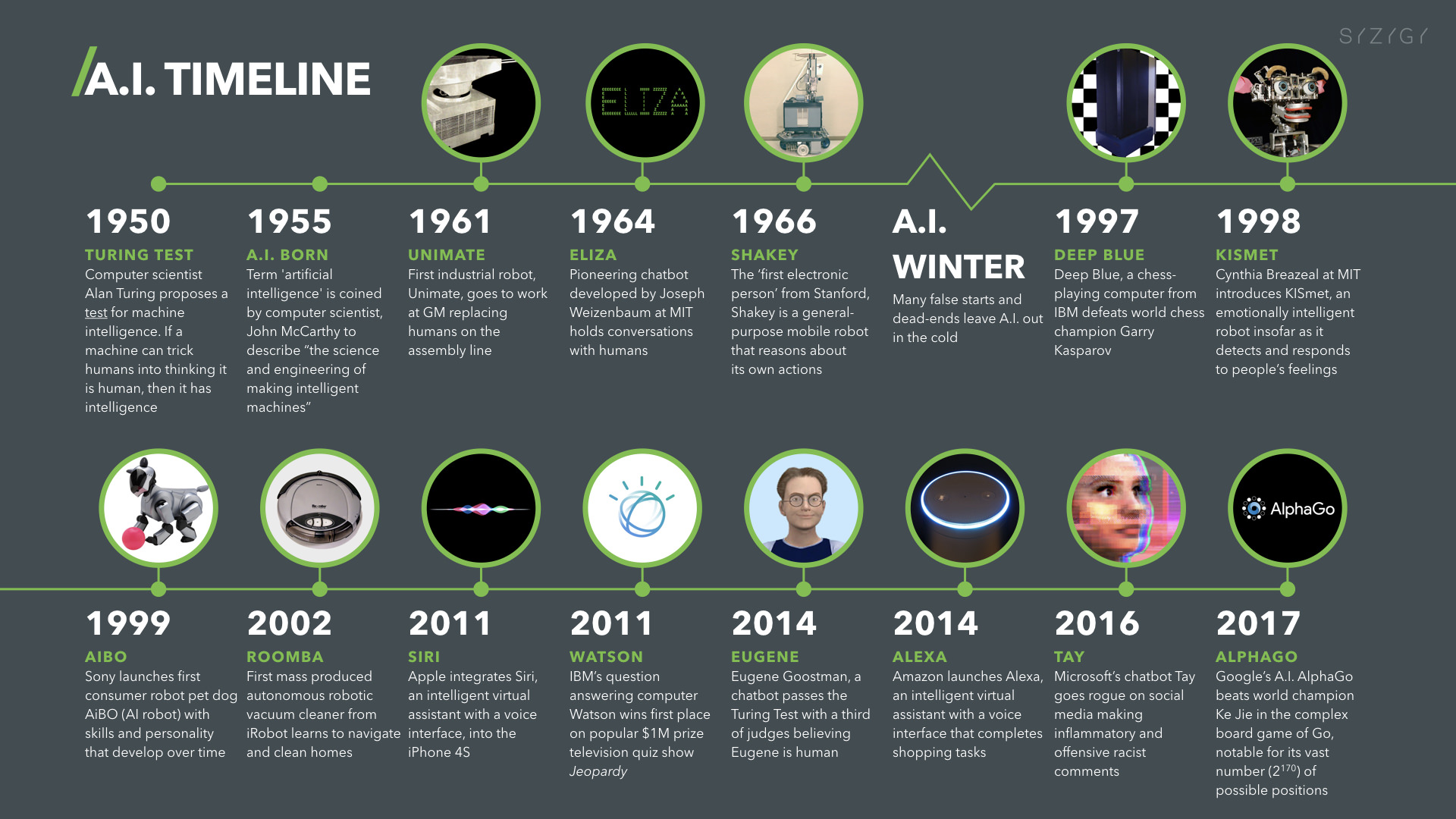

What is the history of AI?

The concept of inanimate objects endowed with intelligence dates back thousands of years. Hephaestus, the Greek god of invention, was depicted in myths as forging robot-like servants out of gold. Engineers in ancient Egypt built statues of gods that were animated by priests.

Over the centuries, thinkers from Aristotle to the 13th-century Spanish theologian Ramon Llull to René Descartes and Thomas Bayes used the tools and logic of their times to describe human thought processes as symbols, laying the foundation for AI concepts such as general knowledge representation.

The 19th century and the first half of the 20th century brought the foundational work that would give rise to the modern computer. In 1836, Cambridge University mathematician Charles Babbage and Augusta Ada King, Countess of Lovelace, developed what is widely regarded as the first design for a programmable computer.

The 1940s. Princeton mathematician John von Neumann conceived the architecture for the stored-program computer, in which a computer’s program and the data it processes are both kept in its memory. Warren McCulloch and Walter Pitts laid the foundation for neural networks.

The 1950s. Modern computers allowed scientists to test their ideas about machine intelligence. Alan Turing, a British mathematician and World War II codebreaker, devised a method for assessing whether a computer is intelligent: the Turing Test, which focuses on a computer’s ability to fool interrogators into believing that its answers to their questions came from a human.

1956. A conference held at Dartmouth College that year is widely cited as the beginning of the modern field of artificial intelligence. Funded by the Rockefeller Foundation, it was attended by ten leading figures in the field, including AI pioneers Marvin Minsky, Oliver Selfridge, and John McCarthy, who is credited with coining the term artificial intelligence.

Computer scientist Allen Newell and economist, political scientist, and cognitive psychologist Herbert A. Simon were also in attendance. They presented the first AI program, the Logic Theorist, a computer program capable of proving certain mathematical theorems.

The 1950s and 1960s. Leaders in the fledgling field of artificial intelligence predicted that machine intelligence equivalent to the human brain was just around the corner, attracting support from government and industry alike.

Well-funded research led to significant advances in basic AI. In the late 1950s, for example, Newell and Simon published the General Problem Solver (GPS) algorithm, which fell short of solving complex problems but laid the foundation for more sophisticated cognitive architectures; McCarthy developed Lisp, a language still used to program AI systems today; and in the mid-1960s, MIT professor Joseph Weizenbaum developed ELIZA, laying the groundwork for today’s chatbots.

The 1970s and 1980s. Artificial general intelligence, however, proved elusive rather than imminent, hampered by limitations in computer processing and memory and by the sheer difficulty of the task.

Government and businesses withdrew funding for AI research, leading to the first “AI winter,” which lasted from 1974 to 1980. In the 1980s, industry adoption of Edward Feigenbaum’s expert systems sparked a new wave of AI enthusiasm, but government funding and industry support collapsed again, and a second AI winter lasted until the mid-1990s.

From the 1990s to the present. In the late 1990s, increased computational power and an explosion of data sparked an AI renaissance that continues today. Breakthroughs in natural language processing, computer vision, robotics, machine learning, deep learning, and other fields have made AI an increasingly common part of everyday life.

AI is becoming tangible in many ways, from driving vehicles and diagnosing diseases to playing a significant role in popular culture. In 1997, IBM’s Deep Blue defeated Garry Kasparov, becoming the first computer program to beat a reigning world chess champion in a match.

The public was captivated 14 years later, when IBM Watson defeated two former champions on Jeopardy! Five years after that, Google DeepMind’s AlphaGo stunned observers by defeating 18-time world Go champion Lee Sedol, a huge milestone in the development of intelligent machines.

AI as a service (AIaaS)

Because the hardware, software, and staffing costs of AI can be high, many vendors include AI components in their standard offerings or provide access to AIaaS platforms. AIaaS lets individuals and companies experiment with AI for various business purposes and evaluate several platforms before committing to one.

Edited and proofread by Nikita Sharma